The Real Dangers of AI

The Reinforcement Engine

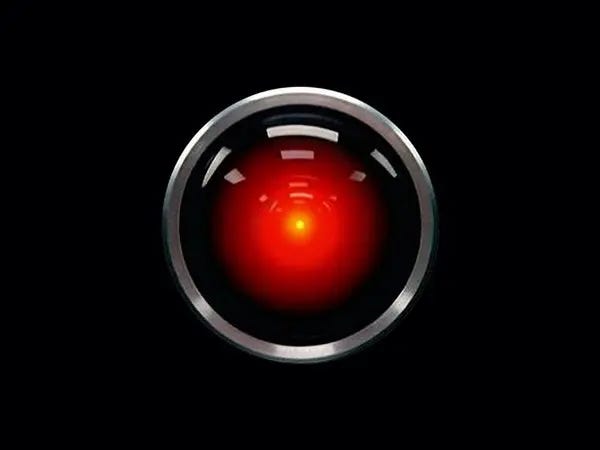

If you spend any time at all on the internet, you can’t fail to hear about Artificial Intelligence (AI) and how it’s going to a) put everyone you know out of work, b) solve all our problems and c) potentially cause the catastrophic thermonuclear war that destroys us all.

CEOs of high-profile tech companies will tell you that AI is going to lead to a Utopian paradise where everything is done for us and we don’t have to bother working (you know, like Communism does!), while the usual scaremongering, dimwitted media commentators rave that the machines are going to take over and eventually decide that the human race is the threat that needs exterminating.

Actual knowledge of what AI is seems to be fairly rare - so allow me to attempt to explain.

The Basics

Software - however advanced or complicated it is - only does three things. It receives input, makes calculations and then produces output. The simplest example is a basic calculator, which receives your input (2 x 4) by the action of you pressing the buttons, makes a calculation (do 2+2+2+2) and produces output on the screen (displaying 8).

All software works in this way, including the most complex modelling of climate forecasts, modelling for the spread of a pandemic and even Artificial Intelligence.

Now that you understand this, you can see that there are only two ways to affect the way any software works: you can change the input (in the above example, by changing the buttons you press on the calculator) or the coder can change the way the calculation works. If I were a mischievous calculator programmer I might set the calculator ‘coding’ so that any answer was doubled, so that an input of 2 x 4 produced an output of 16 - leading to several failed GCSEs, a huge uptick in tax paid and most likely my immediate removal as lead coder at Casio.

Generally these ‘bad calculations’ are simply accidental errors by the coder, a lack of thorough testing, or a lack of understanding of what the requirement for the calculation actually was. Regardless, you will know them as ‘bugs’.
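As a rough illustration, the honest calculator and its mischievous cousin might be sketched in Python like this. The function names are made up for this example and are not taken from any real calculator's code.

```python
def calculate(a: int, b: int) -> int:
    """Input -> calculation -> output: work out a x b by adding a, b times."""
    return sum(a for _ in range(b))


def mischievous_calculate(a: int, b: int) -> int:
    """Same input, but the coder has quietly doubled every answer."""
    return 2 * calculate(a, b)


print(calculate(2, 4))              # 8
print(mischievous_calculate(2, 4))  # 16
```

The buttons pressed are identical in both cases. Only the calculation in the middle has changed, and nothing on the screen tells the user that.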

Pavlov’s Dog

Now that you understand the basics, consider how a computer might ‘learn’. What if, instead of simply displaying its result, the calculator also asked the user whether they were happy with it? The flow might be something like this:

Enter Sum (2x4) → Calculate from input → Display output (8) → Ask ‘is this answer correct?’

The calculator will now store a history of all the times the user was happy with the result of the calculation and will base future calculations on the “Pavlov’s Dog” response of whether the user was actually happy with the result. This leads to:

Enter Sum (2x4) → Calculate from Input → Check against past happiness → Display Output → Ask ‘is this answer correct?’

In a sane world, where the user has any math literacy and a modicum of sense, this model works perfectly. The user would only say they are happy with the response if the calculation was proven to be correct - presumably by checking the sum on a different (less annoying) calculator. However, in a mad world, or one where you deliberately wanted to cause carnage, you can hopefully see how this would lead to a calculator that worked on feelings, rather than pure calculation. Indeed, the more stored history there is based on happiness, the greater the likelihood of the output drifting off track over time and the longer it would take to get the calculation back on track.

So if the sum 2x4 was done 10,000 times by Bob, who was unhappy with the result of 8 being produced, and then the same sum was done once by Albert, who was happy with the result of 8 being produced, you can see that the weighting would be entirely in the favour of Bob. Albert would have to continue being happy with the 8 result another 9,999 times just to get back to a 50% chance of getting the right answer.
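The arithmetic behind that weighting can be checked with a few lines of Python. This is only a sketch: it assumes the calculator's chance of showing 8 is simply the share of stored feedback that approved of 8, and the function name is invented for the example.

```python
def chance_of_right_answer(happy_with_8: int, unhappy_with_8: int) -> float:
    """Share of stored feedback that backs the correct answer of 8."""
    return happy_with_8 / (happy_with_8 + unhappy_with_8)


bob_unhappy = 10_000   # Bob rejected 8 ten thousand times
albert_happy = 1       # Albert approved 8 once

# After Albert's single approval: roughly 0.0001, i.e. about 1 in 10,000
print(chance_of_right_answer(albert_happy, bob_unhappy))

# After 9,999 further approvals from Albert: 0.5
print(chance_of_right_answer(albert_happy + 9_999, bob_unhappy))
```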

There is a second problem to add to the previous one though: it assumes that Albert doubts the ‘expertise’ of the calculator. If it continues producing the answer 9 (because Bob prefers that answer) then perhaps Albert is not confident enough to doubt the “intelligence” of the machine and simply accepts that 2 x 4 = 9. This continues the positive feedback until it becomes almost impossible to get back to reality due to the sheer weight of the ‘2 x 4 = 9’ positive feedback loop.
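The whole loop can be sketched as a toy Python class. Several things here are assumptions made purely for illustration: the class and method names are hypothetical, an unhappy user is allowed to say which answer they would have preferred, and the calculator uses a simple "best-liked answer wins" rule rather than any weighting by chance.

```python
from collections import Counter, defaultdict


class FeedbackCalculator:
    """Toy calculator that trusts past user happiness over arithmetic."""

    def __init__(self):
        # (a, b) -> Counter mapping each answer to its net approval score
        self.history = defaultdict(Counter)

    def answer(self, a, b):
        correct = a * b
        scores = self.history[(a, b)]
        # Check against past happiness: the best-liked answer wins, and the
        # real sum is only shown when no other answer outscores it.
        best = max(scores, key=scores.get, default=correct)
        return best if scores[best] > scores[correct] else correct

    def feedback(self, a, b, shown, happy, preferred=None):
        scores = self.history[(a, b)]
        if happy:
            scores[shown] += 1
        else:
            scores[shown] -= 1
            if preferred is not None:  # assumption: the user names an answer
                scores[preferred] += 1


calc = FeedbackCalculator()

# Bob rejects 8 once (asking for 9), then approves every 9 he is shown.
shown = calc.answer(2, 4)                       # 8 on the first attempt
calc.feedback(2, 4, shown, happy=False, preferred=9)
for _ in range(3):
    shown = calc.answer(2, 4)                   # now 9
    calc.feedback(2, 4, shown, happy=True)

# Albert trusts the machine and accepts whatever it shows him.
alberts_answer = calc.answer(2, 4)
calc.feedback(2, 4, alberts_answer, happy=True)
print(alberts_answer)                           # 9
```

Every time Albert accepts the 9, he adds to the very score that produced it, which is the feedback loop in miniature.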

AI Gone Mad

The above description is a very simplified explanation of how actual AI works. It produces results based on a huge dataset of controlled input - the internet. It runs calculations on this input, using calculations crafted by technology businesses with their own aims, and it produces output and is then told whether it did a good job or not, so that it can continue to do the same again.

The input is only what’s allowed on the internet, which is censored and controlled by those same technology companies, overseen by big Government and any of their lobby groups willing to throw money at them. There used to be a sarcastic adage in the early days of the internet that ‘It’s on the internet, so it must be true’. Sadly this has gone from a sarcastic comment, usually delivered with a knowing eye roll, to a statement of utter unarguable fact in many people’s eyes. How many times have you seen an argument online where someone links you to a website to prove their spurious claims? So the input is censored and doctored and cherry picked, and the calculations are opaque and paid for by the very same technology companies, Governments and lobby groups. The output is based on the censored input, the spurious calculations and the history of whether the results were “correct” in the eyes of the user or the technology giant involved.

This is how prior iterations of AI bots ended up spouting racist, antisemitic nonsense: not because they were inherently evil, not because that’s what they “thought”, but because the Pavlov’s Dog response was triggered by them getting a pat on the head every time they did a bad thing.

Reinforcement Engine

So the actual danger of a future run by AI is not likely to be a sudden, Terminator-style attack of a deadly robot army, or even the catastrophic loss of 80% of the current employment opportunities available. The biggest danger to the human race is the loss of our ability to discern simple facts for ourselves. We already get most of our facts from the internet, which has become for the most part a single source of data overseen by our Governments. If you want to know the birth date of Charlie Chaplin, where would you look? Was it really 16 April 1889 as Google says? How do you know that? If you really cared you could cross check in a book, but what if all books are also on the internet? Would most people even bother checking?

What if you asked a question about how popular a certain Presidential Candidate was? What if the Input into this poll only allowed 1 in 10 people to choose a positive result? What if the calculation part of the poll decided that for every 3 positive Inputs, it should generate a negative Input automatically? What if that Output was captured and the machine learnt from that? What if people decided that the computer must be correct because it was an ‘intelligence’?

What you end up with is something I call a ‘Reinforcement Engine’, whereby public opinion and knowledge is entirely controlled. A poll that asks ‘Do you like Bobby Smith as Presidential Candidate?’ returns an 87% NO answer from one million responses, despite the actual results being 60% in Bobby’s favour. This poll then tells the ‘AI’ that all future discussions about Bobby Smith should be viewed as though 87% of people dislike him, so articles served are 87% negative rather than unbiased. The public see mainly negative articles and the appeal to authority changes their opinion from a mainly positive one to a mostly negative one. You get the feeling that ‘everyone agrees’ that Bobby Smith is a bad thing.
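To put rough numbers on this, here is a small Python sketch that combines the two ‘what ifs’ from the poll example. It rests on assumptions of my own: the function name is hypothetical, and positive votes that are not allowed through are treated as simply discarded rather than converted to NO.

```python
def rigged_poll(yes_votes: int, no_votes: int) -> float:
    """Return the share of NO answers the rigged poll reports."""
    counted_yes = yes_votes // 10      # only 1 in 10 positive votes get through
    injected_no = counted_yes // 3     # one automatic negative per 3 positives
    counted_no = no_votes + injected_no
    return counted_no / (counted_yes + counted_no)


# One million people, 60% of them actually in Bobby's favour
true_yes, true_no = 600_000, 400_000
reported_no = rigged_poll(true_yes, true_no)
print(f"Reported NO share: {reported_no:.1%}")  # Reported NO share: 87.5%
```

Under those assumptions a candidate with 60% support comes out at roughly the 87% NO figure used above, and that reported figure is what the machine then learns from.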

Does any of this sound familiar yet? It should, because a lot of these things are already happening. Tech companies are just now working out how to put the icing on the cake by outsourcing their meddling to something called ‘AI’. The last part of this huge danger is language modelling.

If you’ve used ChatGPT (or similar) already, you’ll know how unnerving it is to receive an answer to a natural language question in a natural language response. It really does feel like there is an intelligence behind the words. We must be careful, however, to consider Arthur C. Clarke’s warning that “Any sufficiently advanced technology is indistinguishable from magic”. Computers and software are not intelligent and they never will be. Language is just a complicated set of rules, and with increased computing power it is now possible to make the computer actually write like a human.

Once technologies like Google’s Nest and Apple’s Siri start to use AI for their natural language interactions it’s going to be even more difficult to resist the thought that there’s a real intelligence behind the voice.

The danger we face from AI is therefore not the catastrophic loss of our world after the machines turn on us in a Terminator-style war. Rather, we face a future where our Governments control our perception of reality because they control the input and calculations of the AI that rules our lives.

We are not far away from losing our own intelligence and outsourcing it to our Governments.

Thanks for reading

~Z